Community

This forum is visible to all visitors and is used to discuss general project matters, such as posting or commenting on dissemination events.

The main aim of synergies is to structure meaningful interactions, combining joint efforts to enhance and optimise the use of resources.

Mapping practices, data, and policies

CRFS Good Practices is a map-based platform for the good practices in innovation developed within the Cities2030 project.

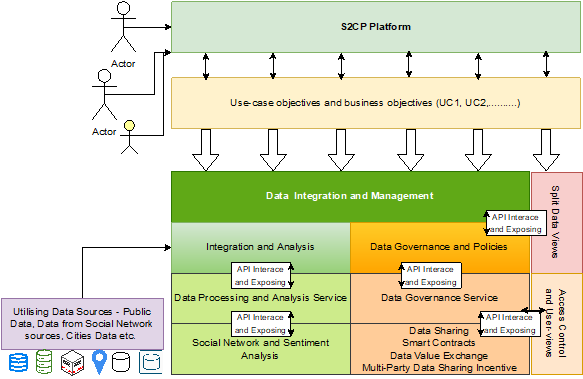

S2CP is a CRFS management platform for data collection, analysis, and representation across multiple interfaces.

The “CRFS Intelligence Lab” is an observatory established at Ca’ Foscari University of Venice, Italy, to cover the dynamics of urban food policies and the associated paradigm shift.
